Monitoring jobs’ performance

For all Hamilton jobs, whether initiated from the command line (sbatch, srun) or through the portal, the portal’s performance monitoring feature lets you view how they use the resources they have been allocated. This can help you to:

- Check if your jobs are performing as expected. For example, is a multi-core job making good use of the CPU cores allocated?

- Investigate the time profiles of your jobs’ CPU, memory and TMPDIR usage or their I/O activity.

- Check if similar jobs could be allocated fewer resources without affecting performance. For example, how much of the allocated memory do your jobs use?
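
As a rough illustration of the last point, the short Python sketch below turns two numbers read from a job's memory graph into a utilisation figure and a possible smaller request. The function names, the 20% headroom and the example values are all assumptions made for illustration; none of them come from the portal or from Slurm.

```python
import math


def memory_utilisation(peak_used_gb: float, allocated_gb: float) -> float:
    """Return the fraction of the allocated memory used at the job's peak."""
    if allocated_gb <= 0:
        raise ValueError("allocated_gb must be positive")
    return peak_used_gb / allocated_gb


def suggested_request_gb(peak_used_gb: float, headroom: float = 0.2) -> int:
    """Return peak usage plus a safety margin, rounded up to whole GB.

    The default 20% headroom is an arbitrary assumption, not a site policy.
    """
    return math.ceil(peak_used_gb * (1.0 + headroom))


if __name__ == "__main__":
    # Hypothetical values read off the memory graph of a running job.
    peak, allocated = 6.0, 32.0
    print(f"{memory_utilisation(peak, allocated):.0%} of the allocation used")
    print(f"A similar job might request about {suggested_request_gb(peak)} GB")
```

How much headroom to keep is a judgement call: jobs whose memory use varies between runs need a larger margin than jobs with a stable profile.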

Performance metrics become available a few minutes after a job has started and they remain visible until the job has finished. (In the future we hope to extend the facility to jobs that have ended.) To view the performance metrics:

- From the dashboard, select Jobs->Active Jobs from the top menu.

- If you wish, sort the list by clicking on a column heading or filter the list of jobs.

- Click on the arrow next to a job you wish to inspect.

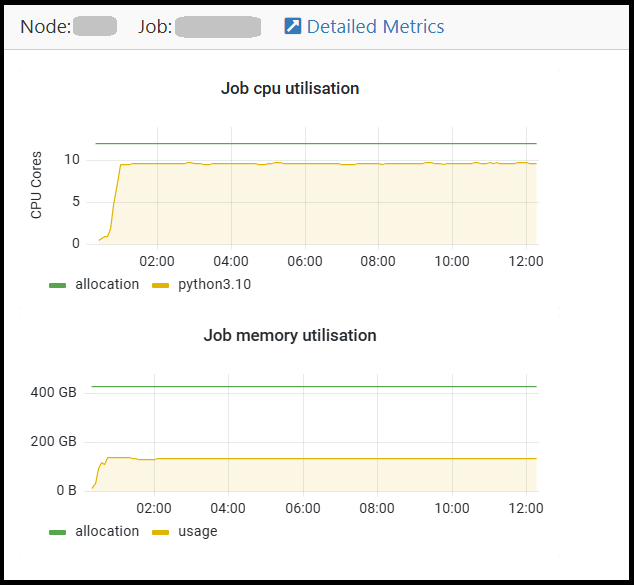

Job metrics are displayed on two pages. The initial page has information about the job’s Slurm request, plus graphs showing its CPU and memory utilisation through time. For jobs that are spread across multiple nodes, results are shown for each node separately. In the example below, a single-node job is using its CPU allocation reasonably well but could perhaps have requested less memory.

To see further usage information, click on Detailed metrics just above the graphs.

The Detailed Metrics page displays additional graphs that show:

- CPU and memory utilisation

- TMPDIR usage

- /nobackup bandwidth and file operations

There are also graphs of GPU metrics: utilisation, memory utilisation, occupancy and bandwidth. These currently apply only to some private nodes.
